AI Risk Management: Managing the Top Sources of AI Risk

Explore AI risks, from cybersecurity to bias & IP. Dive into top strategies to manage these risks effectively.

While much of the broad conversation about AI risk focuses on big, existential threats like killer robots, superintelligence, and mass job loss, the reality for most operational and legal leaders is much more tangible. Their questions are much closer to real life:

- "I don’t think our AI tech is biased, but how much of my runway could be eaten up if we get sued?"

- "Is my insurance policy broad enough to include mistakes made by our LLM-powered tool?"

- "We’re not directly ripping off other people’s IP, but could I be named in a lawsuit if the foundational model we’ve built on is?"

These questions are not just hypotheticals; they're reflective of the very real anxieties facing companies as they venture into the largely uncharted territory of AI. With each question, the underlying theme is clear: How do we harness the monumental potential of AI while effectively managing the risks?

Why risk management in AI matters

The use of AI continues to grow across a wide array of industries. From customer care to sales, healthtech to fintech, the genie is out of the bottle.

If you’re trying to stay competitive, there’s really no avoiding AI, and yet the risks are just as unavoidable:

- AI product errors. Systems make mistakes due to limitations in training data or overfitting, which can expose companies to liabilities, especially in sectors like healthcare or finance.

- AI bias. Algorithms can perpetuate biases, leading to discrimination in hiring, lending, and other areas, potentially resulting in lawsuits and reputational damage.

- Violation of AI regulations. Legislation around AI is evolving rapidly, and organizations may unknowingly breach laws designed to reduce bias or increase transparency.

- Intellectual property infringement. AI tools, such as those for generating text or images, may infringe on copyrighted material, exposing companies to costly lawsuits.

Legal realities: the AI risk landscape

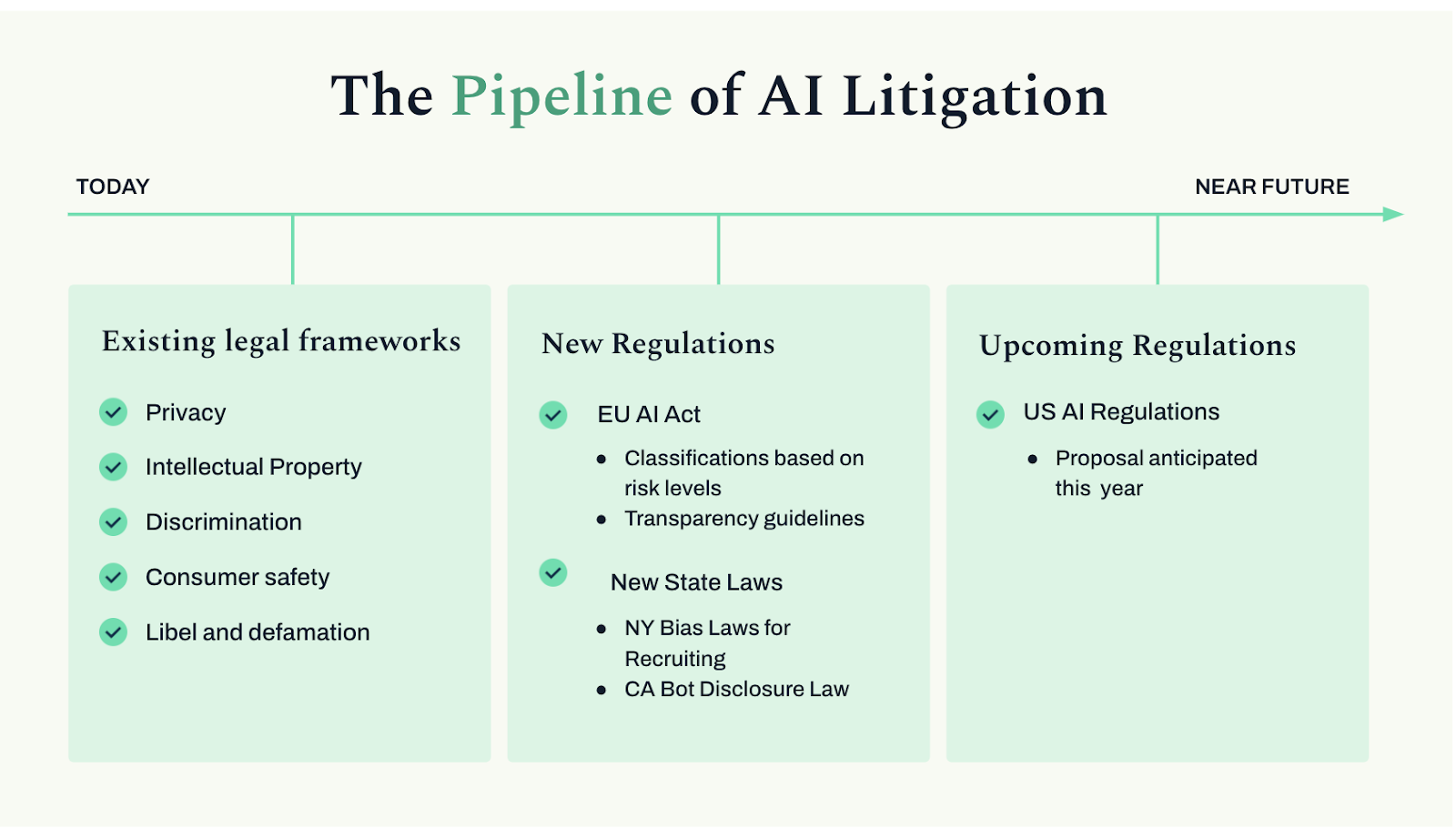

Today, much of the litigation around AI arises from existing legal frameworks that you may already be familiar with: laws related to privacy, intellectual property, discrimination, consumer safety, and libel. While these laws are not new, the introduction of AI can amplify the frequency and severity of related litigation.

Yet, it's not just the current legal frameworks that demand our attention. The horizon is changing. The EU's AI Act, which has been passed and whose obligations largely take effect by August 2026, is a harbinger of more structured AI governance in the near future. Similar regulatory efforts are underway in the U.S., signaling a shift toward more stringent AI oversight. While these changes loom in the distance, they are vital considerations for your long-term risk management strategies.

The top sources of AI risk

None of these AI risks can ever be completely avoided. So the only option is to carefully identify and manage them. That’s why AI risk management frameworks exist.

In this section, we’ll explain essential categories of AI risk. Later in this post we’ll look at management strategies for each one.

Cyber and Privacy

The cyber threats facing companies that use AI aren’t necessarily new, but they are more severe: AI systems process far larger volumes of data, much of it sensitive, which raises the risk profile significantly. Cyber breaches or privacy infractions can lead to hefty financial penalties and lasting reputational harm.

Additionally, the question of privacy becomes more complex when data is used to train models. Can a model ever truly “forget” data? How does this align with or contradict current privacy laws demanding data erasure?

Particular sectors stand out for their vulnerability, mostly due to the nature of data they process:

- Healthcare: Handling vast quantities of protected health information (PHI), healthcare entities must adhere to the rigorous standards set by HIPAA.

- Fintech: With inherently sensitive financial data, fintech companies are bound by laws such as the Fair Credit Reporting Act (FCRA).

- Consumer and Martech: Organizations that collect, analyze, and use vast amounts of consumer data for marketing purposes face heightened scrutiny under privacy laws such as GDPR in Europe and CCPA in California.

- EdTech: With the rise of online learning platforms, EdTech companies handle a significant amount of student data. These companies must comply with the Family Educational Rights and Privacy Act (FERPA) in the U.S.

- Biometric Data Handlers: Those collecting biometric data, for applications such as facial recognition and fingerprint analysis, should be especially cognizant of legislation like the Illinois Biometric Information Privacy Act (BIPA).

IP

IP risk in AI encompasses several critical areas: copyright infringement, patent disputes, and the unauthorized use of proprietary datasets. AI's ability to process, replicate, and sometimes enhance human-generated content raises unique legal questions. Outputs from AI systems, whether text, imagery, or music, derived from extensive datasets, may unintentionally infringe on existing copyrights or patents.

Many of the legal questions here remain unresolved. Ongoing disputes leave the application of the fair use doctrine and the legality of data scraping for AI training in flux. As these cases progress through the courts, we anticipate more clarity on the boundaries of IP risk in AI.

Certain applications are particularly vulnerable to IP risks due to their reliance on extensive datasets:

- General Large Language Models (“LLMs”): Central to many AI innovations, LLMs are under significant scrutiny. Their capability to generate human-like text may inadvertently replicate copyrighted material.

- Media Generators: Tools producing art, music, and video content are transforming creative industries. Yet, their potential to create works resembling copyrighted materials presents pressing IP concerns.

- Coding Tools: Assistants that aid in coding or optimizing code might unintentionally use proprietary code snippets or algorithms, posing risks of copyright and patent infringements.

- Data Analytics Platforms: These platforms interpret vast datasets, which may include sensitive or proprietary information, risking unintentional IP misuse or disclosure.

- Automated Content Aggregators: Curating content from various sources without proper licensing or attribution could infringe upon the copyrights of original creators.

- Chatbots and Virtual Assistants: These technologies might generate responses that inadvertently mimic copyrighted content, making IP compliance harder to maintain.

- Educational and Training Tools: Offering courses or training materials could involve using copyrighted content without proper authorization.

- Translation and Localization Services: Adapting content for different languages or cultures risks creating derivative works that infringe on original IP rights.

Bias and Discrimination

Bias is a human flaw that can inadvertently influence the AI systems we create. The consequences of a biased AI system can be far-reaching and potentially catastrophic. Consider the impact a discriminatory AI algorithm might have on credit scoring, real estate valuation, or employment opportunities. When these biases are embedded systematically, the potential for harm increases significantly, affecting individuals and communities on a much larger scale.

Even when AI systems seem unbiased, it's crucial for risk leaders to emphasize transparency and explainability. Ensuring that the decision-making process of AI is clear and understandable is essential. Without transparency and documentation, even well-intentioned companies may face legal challenges and adverse judgments, regardless of the fairness of their data or algorithms. In fact, agencies such as the EEOC, DOJ, FTC, and CFPB have already jointly declared their intent to enforce penalties where AI contributes to unlawful discrimination.

Here are key sectors and applications where bias can have a profound impact:

- HR and Recruiting: AI in recruitment and employee assessments faces scrutiny under the EEOC guidelines to prevent bias, directly affecting individuals' career prospects.

- Healthcare and Medical Diagnostics: Biases in AI can lead to unequal health outcomes, raising significant concerns about patient care across diverse groups.

- Criminal Justice and Law Enforcement: AI tools in predictive policing and sentencing can profoundly influence fairness, especially regarding racial biases.

- Financial Services: This sector, covering everything from credit assessments to insurance underwriting, operates under strict regulations like the Equal Credit Opportunity Act to ensure fairness and prevent discrimination.

- Education: AI in education needs careful oversight to ensure equitable treatment for all students, highlighting its impact on educational access.

- Real Estate: AI in property valuation and listings must comply with the Fair Housing Act, so that algorithms do not discriminate in ways that affect housing access.

- Surveillance and Security: AI in facial recognition and security systems must address fairness and privacy, with misidentification posing significant risks for specific demographics.

Model Errors and Performance

AI models stand apart from traditional software because their behavior is learned from data rather than explicitly programmed, which can lead to unpredictable outcomes. Unlike conventional systems, where a given input produces a predictable output, AI models can sometimes produce "hallucinations" or otherwise incorrect outputs, as you may have personally observed using technologies like ChatGPT. This unpredictability underscores the need for stringent oversight and robust mitigation strategies.

Interestingly, AI systems are often held to higher standards than humans. While human decision-making is naturally prone to errors, we expect more from automated decisions. Despite AI's potential to outperform human accuracy, any failure, especially one that causes financial losses for clients, can erode trust significantly.

While AI product and model errors can affect every AI application, certain industries face greater scrutiny because of the high stakes involved, especially where decision-making errors could lead to significant financial losses. Here are examples of sectors with high AI error risk:

- Healthcare: Errors in AI-driven diagnostics or treatment recommendations can result in misdiagnoses or ineffective treatments, posing health risks and financial liabilities.

- Financial Services: Mistakes in algorithmic trading, credit scoring, or fraud detection can cause incorrect financial advice or undetected fraudulent activities, leading to financial repercussions.

- Automotive and Autonomous Vehicles: Malfunctions in AI for navigation or autonomous driving can cause accidents, property damage, and financial compensation claims.

- Cybersecurity: Errors in AI security systems might miss vulnerabilities, leading to data breaches and substantial financial damages.

- Manufacturing: Mistakes in AI-driven production or quality control can halt production, waste materials, or necessitate recalls, impacting financial and reputational standing.

- Professional Services: For lawyers, accountants, and other professionals with fiduciary responsibilities, the margin for error is minimal. Errors in AI decision-making could lead to breaches of professional standards of care.

AI Regulatory

Until now, our discussion around AI risk has largely been framed by how it fits within existing legal and regulatory structures. However, the landscape is shifting dramatically. The era of AI-specific regulations is on the horizon, signaling a pivotal shift in how AI technologies will be governed moving forward.

With the passage of the AI Act, the European Union has made a decisive move and set a precedent for future AI regulation, with most of its obligations taking effect in 2026. The act may well serve as a model for upcoming regulations in the United States.

While your company may not be directly subject to these new regulations today, their scope and implications are important to understand. Moreover, the principles and best practices embedded within these regulations offer valuable guidance that can benefit you regardless of the legal mandate.

How to manage each category of AI risk

Now that we’ve laid out the risks, let’s look at management strategies for each category.

Cyber and Privacy

AI technologies intensify the need for risk mitigation strategies, though the core practices to safeguard against cyber and privacy risks share some common ground with traditional IT security measures.

Here are some key strategies you can implement:

- Implement rigorous cybersecurity practices that align with SOC 2 requirements.

- Secure sufficient cyber insurance to cover potential breaches.

- Keep meticulous records of data processing activities, as GDPR Article 30 requires, paying particular attention to those involving automated decision-making, which is governed by GDPR Article 22.

- Conduct Data Protection Assessments for automated processes impacting individuals, detailing consent protocols and opt-out options.

- Adhere to biometric laws by transparently informing users and obtaining explicit consent, with the Illinois BIPA setting some of the strictest requirements.

- Ensure third-party chatbot applications comply with laws related to wiretapping and eavesdropping, notably in jurisdictions mandating two-party consent.
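
To make the record-keeping point concrete, here is a minimal Python sketch of what a log entry for one automated decision might look like. The `DecisionRecord` schema, its field names, and the sample values are all hypothetical; treat this as a starting point to discuss with counsel, not a statement of what any regulation requires.

```python
import json
from dataclasses import dataclass, asdict, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """One automated decision, captured for later audit (hypothetical schema)."""
    subject_id: str          # pseudonymous ID rather than raw personal data
    purpose: str             # why the data was processed
    model_version: str       # which model produced the decision
    outcome: str             # the decision that was returned
    consent_obtained: bool   # whether consent was on file at decision time
    human_reviewed: bool = False
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def to_log_line(record: DecisionRecord) -> str:
    """Serialize a record as one JSON line for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


# Made-up example values.
record = DecisionRecord(
    subject_id="user-123",
    purpose="loan pre-screening",
    model_version="credit-model-v7",
    outcome="refer_to_human",
    consent_obtained=True,
)
print(to_log_line(record))
```

Writing one line per decision keeps the log easy to search when a regulator, customer, or insurer asks how a particular outcome was reached.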

IP

While many IP cases are ongoing, there are proactive steps to mitigate IP risks:

- Conduct Thorough IP Audits: Regularly review the data, algorithms, and content in AI applications for potential IP infringements, including the sourcing and licensing of training data and third-party content.

- Implement Clear Licensing Agreements: Ensure all data, software, and content used or generated by AI systems are covered by licensing agreements specifying usage rights, limitations, and IP law compliance.

- Develop IP Indemnification Policies: Negotiate indemnification clauses in contracts with partners and clients to address potential IP litigation costs.

- Seek Expert Legal Advice: Engage with legal professionals specializing in IP law and technology to navigate the legal landscape, stay abreast of regulatory changes, and receive guidance on IP issues.

- Obtain AI Insurance from Vouch: AI Insurance includes coverage for IP-related lawsuits, up to your chosen sublimit.

Bias and Discrimination

AI companies can lessen the risk of lawsuits or penalties through a mix of legal, technical, and ethical strategies:

- Diverse Data and Testing: Train AI models with diverse data sets and conduct extensive demographic testing.

- Documenting Decision Processes: Maintain detailed records of AI decision-making processes to demonstrate compliance and due diligence.

- Ethical AI Guidelines: Establish ethical AI guidelines emphasizing fairness, accountability, and transparency.

- Regular Audits and Compliance Checks: Perform both internal and external audits of AI systems to help assess compliance and identify risks.

- Training and Awareness Programs: Educate development and product management teams on the importance of avoiding bias in AI systems.

- Legal Consultation and Review: Collaborate with legal experts familiar with non-discrimination laws and AI applications.

- Obtain AI Insurance from Vouch: AI Insurance includes coverage for algorithmic bias lawsuits, up to your chosen sublimit.
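
As one illustration of the demographic testing mentioned above, the Python sketch below compares favorable-outcome rates across groups and flags any group whose rate falls below 80% of the best-performing group's rate. That threshold echoes the "four-fifths" rule of thumb from U.S. employee selection guidelines; it is a screening heuristic, not a legal test. The function names and sample data are our own assumptions for the demo.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """Return the share of favorable outcomes for each group.

    `outcomes` is an iterable of (group, selected) pairs, where
    `selected` is True when the model produced the favorable result.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, selected in outcomes:
        totals[group] += 1
        if selected:
            favorable[group] += 1
    return {g: favorable[g] / totals[g] for g in totals}


def adverse_impact_ratios(rates):
    """Divide each group's rate by the highest group rate."""
    best = max(rates.values())
    if best == 0:
        # Nobody received the favorable outcome, so there is no gap to measure.
        return {g: 1.0 for g in rates}
    return {g: r / best for g, r in rates.items()}


# Made-up sample: group A is selected 6 of 10 times, group B 3 of 10.
sample = [("A", i < 6) for i in range(10)] + [("B", i < 3) for i in range(10)]
rates = selection_rates(sample)
ratios = adverse_impact_ratios(rates)
flagged = sorted(g for g, ratio in ratios.items() if ratio < 0.8)
print(rates, ratios, flagged)
```

A flagged group is a prompt for investigation and documentation, not proof of discrimination; small samples in particular can swing these ratios widely.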

Model Errors and Performance

Effective risk management combines technical diligence, legal foresight, and insurance measures. Here are some strategies for mitigating model errors:

- Robust Batch Testing: Before deploying AI models, conduct extensive batch testing under varied conditions to identify potential errors. This testing should simulate real-world scenarios as closely as possible to uncover issues that may not be evident in controlled tests.

- Human-in-the-Loop (HITL) Testing: Incorporate human oversight into the AI's lifecycle. Human experts can review AI decisions and outputs periodically, providing an essential check on AI’s autonomous operations and ensuring accuracy and reliability.

- Continuous Monitoring and Feedback Loops: After deployment, continuously monitor AI systems for unexpected behavior or errors. Establish feedback loops that allow users to report issues promptly, enabling quick fixes and updates to prevent widespread impact.

- Stakeholder Engagement: Involve stakeholders, including clients, end-users, and industry experts, in the development process. Their insights can help identify potential issues early on, allowing for adjustments before full-scale deployment.

- Thoughtful Contract Language: Engage legal professionals specializing in technology and intellectual property to navigate legal complexities. Thoughtfully crafted contracts with clear terms regarding AI capabilities and limitations can set the right expectations and provide legal safeguards.

- Product Disclaimers: Use product disclaimers to communicate the potential risks and limitations associated with your AI technology. Such transparency can manage client expectations and may offer an added layer of legal protection.

- AI Insurance: Lastly, securing AI-specific insurance coverage is a critical safety net. It protects against claims arising from AI errors, covering legal fees, settlements, and other related expenses. This insurance acts as a financial buffer, allowing companies to navigate the unpredictable terrain of AI with confidence.
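
Two of the strategies above, batch testing and human-in-the-loop review, can be sketched in a few lines of Python. Everything here is a simplified assumption: `toy_model` stands in for a real model, and the 0.9 review threshold is an arbitrary example rather than a recommended value.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    label: str
    confidence: float  # 0.0 to 1.0, as reported by the model


def batch_accuracy(predict, cases):
    """Run `predict` over labeled cases and return the fraction correct."""
    if not cases:
        raise ValueError("need at least one test case")
    correct = sum(
        1 for inputs, expected in cases if predict(inputs).label == expected
    )
    return correct / len(cases)


def route(prediction, review_threshold=0.9):
    """Send low-confidence outputs to a human instead of acting on them."""
    if prediction.confidence >= review_threshold:
        return "auto"
    return "human_review"


def toy_model(amount):
    """Hypothetical stand-in for a real model, used only for this demo."""
    if amount > 1000:
        return Prediction("flag", 0.95)
    return Prediction("ok", 0.70)


# Made-up labeled cases: (transaction amount, expected label).
cases = [(50, "ok"), (5000, "flag"), (1200, "ok"), (20, "ok")]
accuracy = batch_accuracy(toy_model, cases)
decisions = [route(toy_model(amount)) for amount, _ in cases]
print(accuracy, decisions)
```

Note that the third case is both wrong and confidently wrong, so it sails past the human reviewer. Confidence-based routing catches uncertainty, not every error, which is why it works best alongside batch testing and ongoing monitoring.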

AI Regulatory

To prepare for the EU AI Act and anticipate similar regulations elsewhere, AI companies should:

1. Assess Your Risk Category: First and foremost, determine your AI application's classification under the EU AI Act, which sorts systems into risk tiers ranging from minimal to unacceptable. This categorization will guide the regulatory standards you must meet.

2. For High Risk Applications: Adhere to the key requirements the Act sets out for high-risk systems, which include:

- Risk Management System: Establish a robust risk management system that covers the entire lifecycle of the AI system, continuously identifying and addressing potential risks.

- Data Governance and Quality Management: Implement data governance practices that ensure data quality, accuracy, and representativeness. Include measures to mitigate bias and ensure secure data handling.

- Transparency Measures: Provide clear information to users, including the system's capabilities and limitations, ensuring users understand how AI decisions are made.

- Human Oversight: Incorporate human oversight mechanisms, such as human-in-the-loop, to review and verify AI outputs and intervene when necessary.

- Accuracy, Robustness, and Cybersecurity: Ensure the AI system is accurate, robust against manipulation, and incorporates cybersecurity measures to protect against attacks and data breaches.

3. Stay Involved: Engage with stakeholders, including legal experts, regulatory bodies, and civil society groups, to stay updated on regulatory developments and community standards. This collaboration can inform your compliance strategy and highlight best practices.
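
For teams that want to track step 1 internally, a first-pass triage could look like the Python sketch below. The tier names follow the way the EU AI Act's risk levels are commonly described, but the keyword lists are illustrative, incomplete assumptions of ours, and real classification depends on the Act's text and legal advice.

```python
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Illustrative and incomplete lists, assumed for this demo only.
TIER_BY_USE = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "hiring": RiskTier.HIGH,
    "credit scoring": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
}


def triage(use_case: str) -> Optional[RiskTier]:
    """Return a provisional tier, or None when the use case needs manual review."""
    return TIER_BY_USE.get(use_case.strip().lower())


for use in ["Hiring", "chatbot", "weather forecast"]:
    tier = triage(use)
    print(use, "->", tier.value if tier else "needs manual review")
```

Returning `None` for anything unrecognized is deliberate: an unknown use case should go to a person for review rather than default to the lowest tier.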

AI risk management frameworks

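AI risk management frameworks are structured sets of practices for identifying, assessing, and mitigating the risks of building and deploying AI systems. Organizations use them to assign accountability, document decisions, and demonstrate due diligence to regulators, customers, and insurers.

Three are referenced most often:

- The NIST AI Risk Management Framework: A voluntary framework from the U.S. National Institute of Standards and Technology, best known for its four core functions: Govern, Map, Measure, and Manage.

- The EU AI Act: Binding legislation rather than a voluntary framework, best known for its risk-based tiers and the obligations it places on high-risk systems.

- ISO/IEC standards: International standards such as ISO/IEC 42001 (AI management systems), which organizations can be certified against, and ISO/IEC 23894 (guidance on AI risk management).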

Conclusion: Embracing the AI Revolution with Preparedness

As we stand on the cusp of a new era, it's undeniable that artificial intelligence will profoundly transform the way we conduct business. Whether you view these changes with boundless optimism or cautious skepticism, AI's influence is burgeoning, and we are just witnessing the dawn of its potential.

The rise of AI has already catalyzed the launch of countless startups, each with a vision of revolutionizing their respective industries. These innovations promise efficiency, growth, and solutions to complex problems that have long challenged us. Yet, as history teaches us, change brings new risks as much as opportunities.

As the insurer of over 500 AI companies, we're deeply invested in the collective journey toward a future where risks are understood, managed, and mitigated. We hope that the insights here will help you chart a course through the AI landscape with confidence.

Learn more about Vouch's AI coverage.